Latent Spaces: Seeing Inside an AI Brain

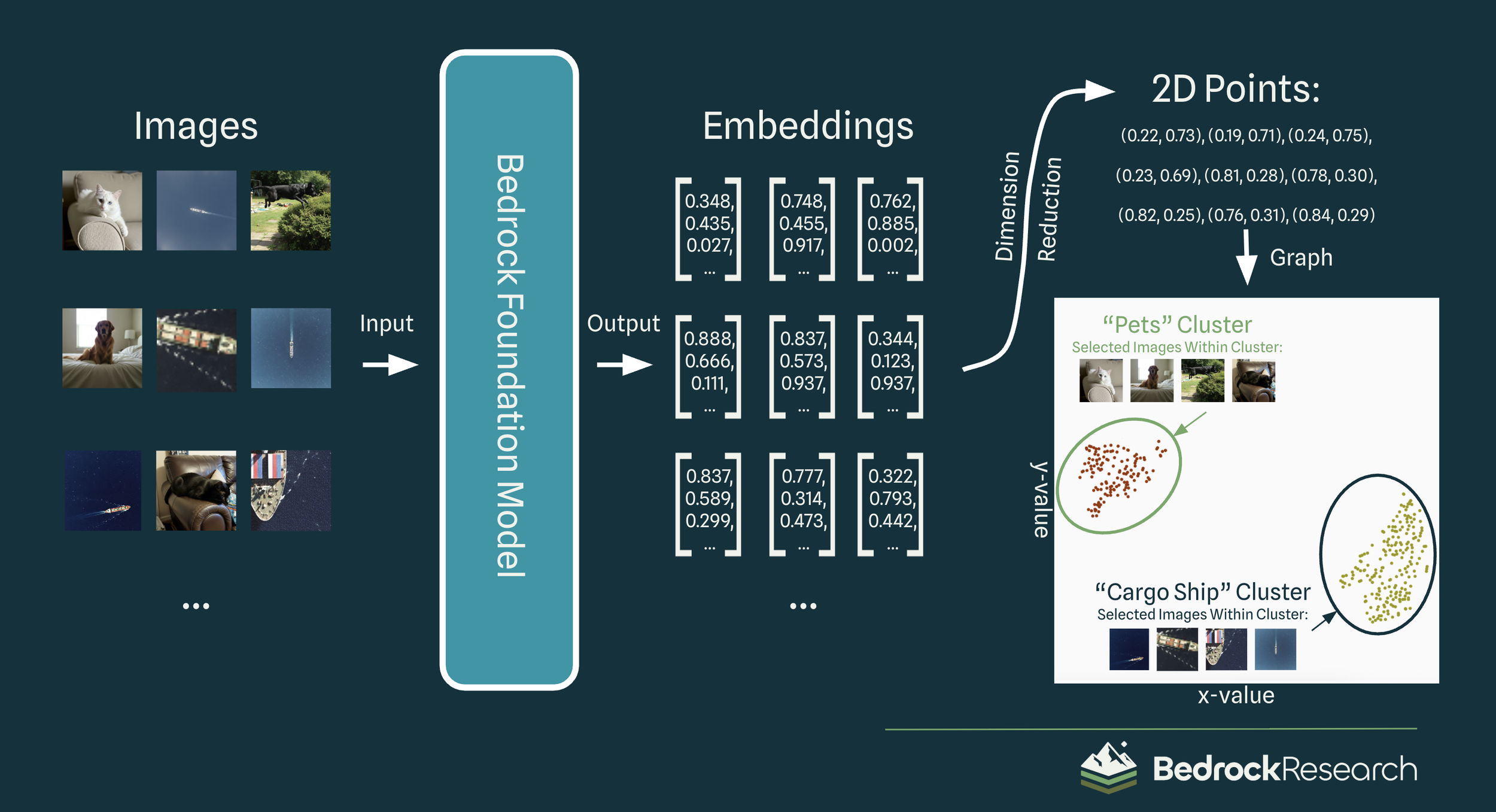

Imagine intercepting someone’s brain signals while they look at photos. Even if no one told them what was in each image, their neurons would “light up” in consistent ways for similar things: images of pets would trigger patterns much like those of other pets, while images of cargo ships would produce a different pattern entirely.

From pixels to plots

Modern vision models do something very similar: they convert an image into an embedding, essentially a numerical fingerprint that summarizes the shapes, textures, and context in the image.

These fingerprints bundle up the many concepts, or features, that the model learned to recognize during training; large models often track thousands of them, so each fingerprint is a point in a space with thousands of dimensions.

Because our 3D brains are not great at imagining thousand-dimensional geometry, we use dimensionality-reduction techniques to map these fingerprints onto a 2D or 3D canvas in a way that tries to keep nearby things nearby.
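Bedrock’s choice of projection isn’t specified here, so as an assumption this sketch uses principal component analysis (PCA), the simplest linear option, written in NumPy. Nonlinear methods such as UMAP or t-SNE are more common for plots like these because they do a better job of keeping nearby points nearby. The input is random stand-in data, not real model embeddings.

```python
import numpy as np

def project_2d(embeddings: np.ndarray) -> np.ndarray:
    """Project (n_images, n_dims) embeddings onto their top-2 principal components.

    Assumption: PCA stands in for whatever projection a real pipeline uses.
    """
    # Center each dimension so the components describe variation, not the mean.
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T  # shape: (n_images, 2)

# Stand-in data: two groups of 1,024-dimensional "fingerprints" around different centers.
rng = np.random.default_rng(0)
dims = 1024
pets = rng.normal(0.0, 1.0, size=(50, dims)) + 5.0
ships = rng.normal(0.0, 1.0, size=(50, dims)) - 5.0
points = project_2d(np.vstack([pets, ships]))
print(points.shape)  # (100, 2)
```

Because the two synthetic groups differ mainly along one direction, they land on opposite sides of the first axis of the resulting 2D plot.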

When you plot these reduced fingerprints for a large collection of images, natural groupings (clusters) pop out.

These clusters reflect what the images are about, not just how their pixels look. The model groups images by meaning and context (“ship-ness,” “urban-ness,” “vegetation,” ...) rather than by raw color patterns. Two Synthetic Aperture Radar (SAR) image chips of similar ships might cluster together even if one is noisy and the other is bright, while a white rooftop and a snowfield won’t, despite sharing lots of white pixels. This “meaning-first” map of the data is what people call a latent space.
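To make “nearby” concrete: closeness in a latent space is commonly measured with cosine similarity, which compares the direction of two embedding vectors. The sketch below uses made-up four-dimensional vectors in place of real embeddings (which have far more dimensions) and finds the library images closest to a query chip; this is the same notion of closeness that makes clusters form.

```python
import numpy as np

def nearest_neighbors(query: np.ndarray, library: np.ndarray, k: int = 2) -> np.ndarray:
    """Return indices of the k library embeddings most cosine-similar to query."""
    # Normalize to unit length so a dot product equals cosine similarity.
    q = query / np.linalg.norm(query)
    lib = library / np.linalg.norm(library, axis=1, keepdims=True)
    scores = lib @ q
    # Highest similarity first.
    return np.argsort(-scores)[:k]

# Hypothetical 4-D embeddings for illustration only.
library = np.array([
    [0.9, 0.1, 0.0, 0.2],  # 0: ship, bright SAR chip
    [0.8, 0.2, 0.1, 0.1],  # 1: ship, noisy SAR chip
    [0.0, 0.1, 0.9, 0.3],  # 2: open water
    [0.3, 0.9, 0.0, 0.1],  # 3: urban rooftop
])
query = np.array([0.85, 0.15, 0.05, 0.15])  # another ship chip
print(nearest_neighbors(query, library))  # [0 1]: the two ship chips
```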

While techniques for grouping and classifying images have existed for decades, the key difference here is that the model never saw a single label. Not once was it told “this is an image of a pet”; instead, it learned on its own which features make pets distinct from other images. For teams drowning in overhead imagery, this matters because Bedrock can jump straight from raw scenes to actionable insights without spending time and money on a comprehensive labeling campaign.

For more information on how Bedrock’s geospatial foundation models learn without labels, see our previous blog post.

From pets to planets

Embeddings of satellite imagery are just as revealing. Plotting the latent space shows Bedrock’s vision models progressing from basic concepts, such as urban vs. rural, to harder tasks, such as classifying ship types, and on to very advanced inference, such as estimating a scene’s country of origin from its urban patterns in Synthetic Aperture Radar (SAR) imagery.

Our geospatial foundation models encode optical, SAR, and thermal imagery into one common latent space. This lets us extract features from, and compare, different types of imagery without building a specialized model for each data type. As a result, a single cluster can capture even a very specific concept, like a particular class of aircraft, whether it was imaged by an optical, SAR, or thermal sensor. Because SAR sees through clouds and neither SAR nor thermal sensors depend on daylight, our classification pipeline can keep working through clouds and at night.

Beyond the pretty plots

Latent space analysis is already useful on its own, but its real power comes from feeding these learned concepts into downstream models. To demonstrate, let’s build an unsupervised ship detector together:

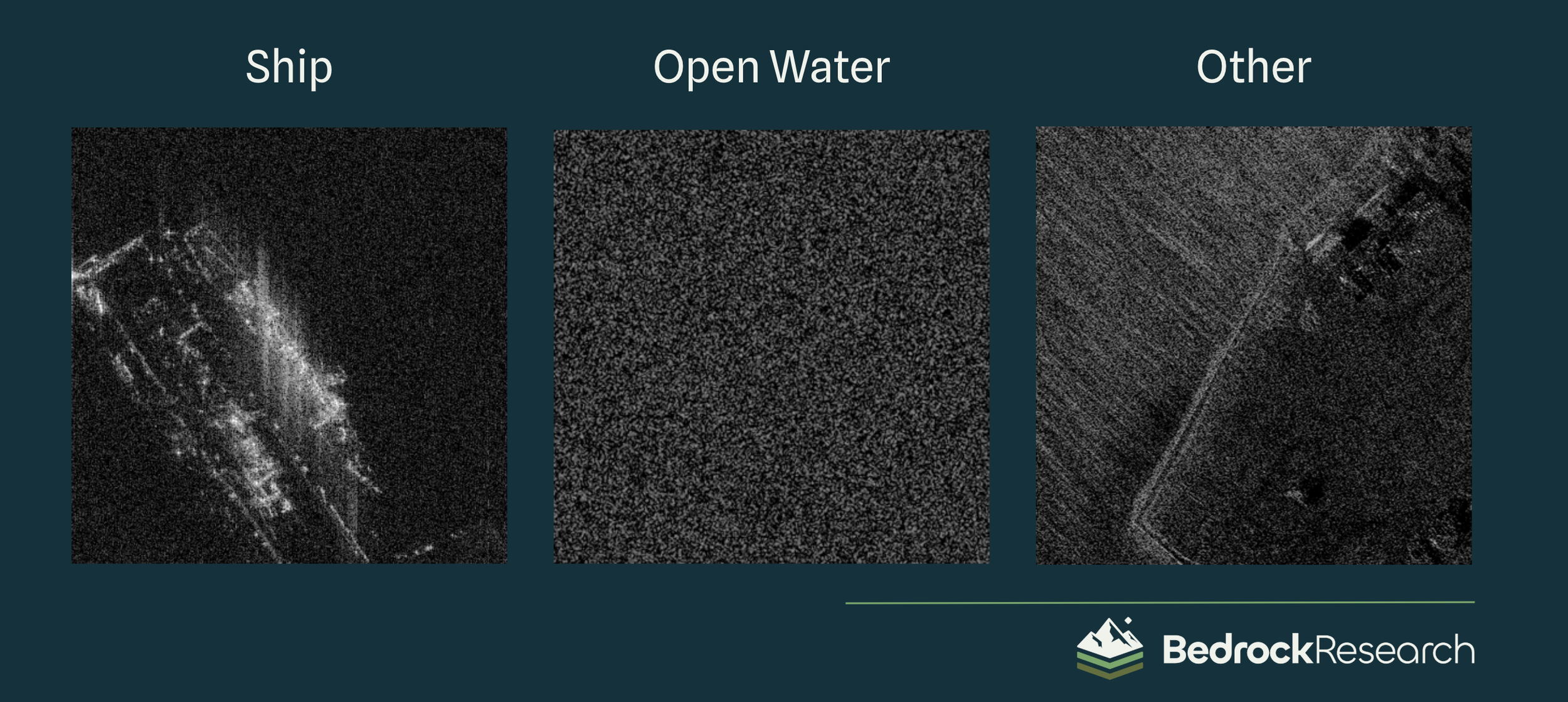

1. Collect the data

Assemble a varied dataset of overhead imagery that likely includes ships, open water, and a mix of other scenes:

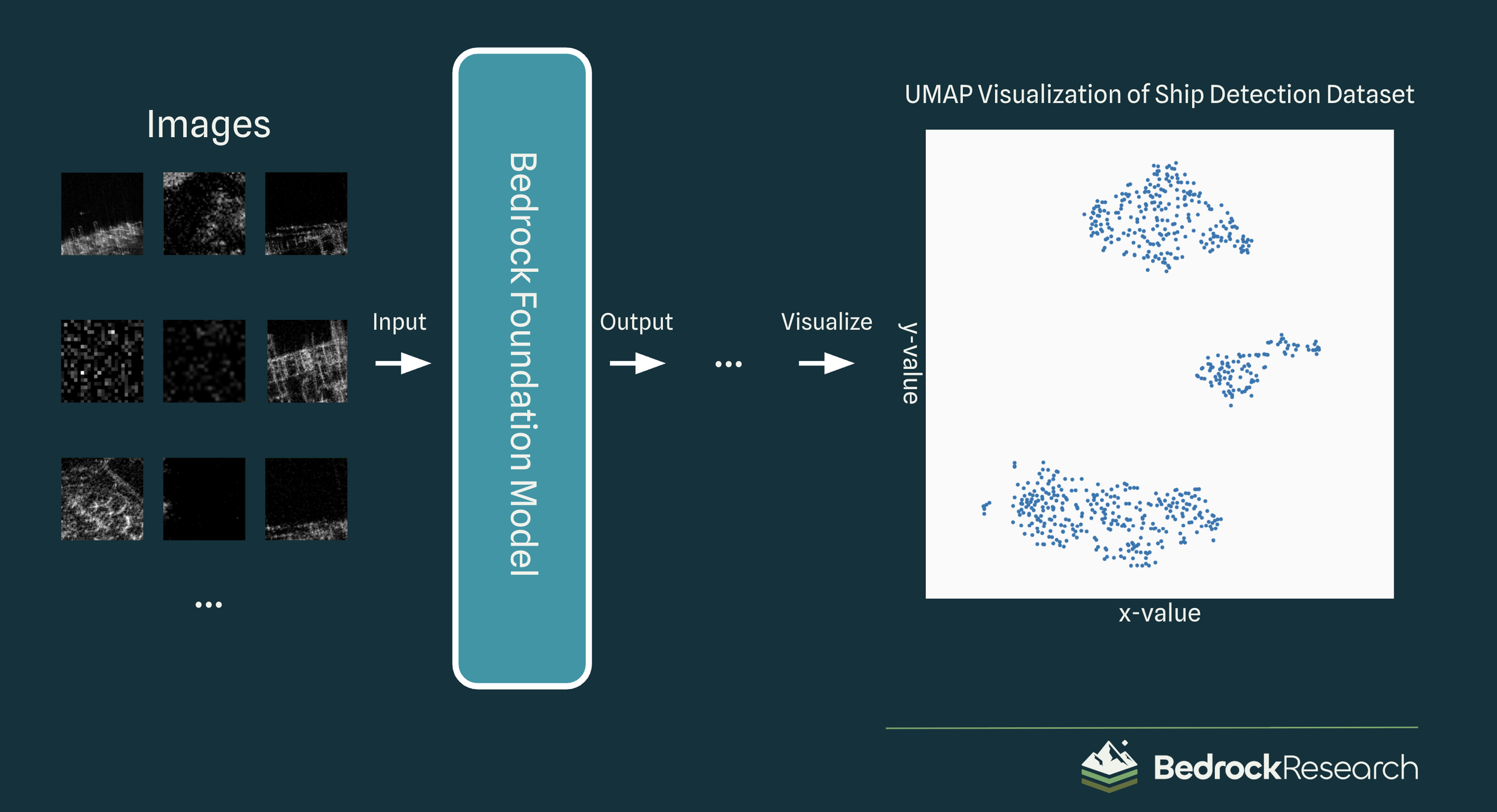

2. Embed these images

Run each image through the model to produce its embedding, then project the embeddings down to 2D to visualize the latent space. Patterns begin to emerge:

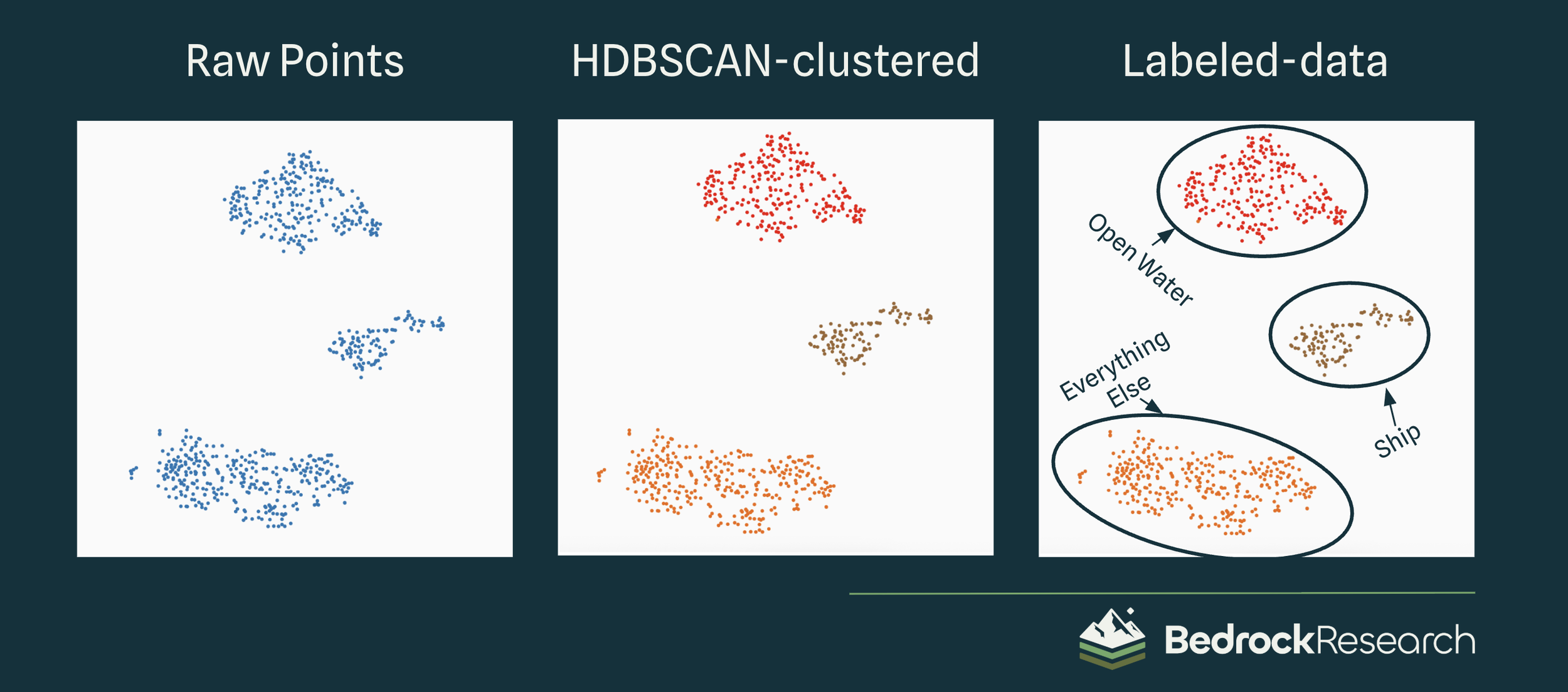

3. Evaluate the latent space

Use a clustering algorithm such as HDBSCAN to automatically assign each image to a group. Then give each group a semantic label such as “Ship,” “Open Water,” or “Other.”

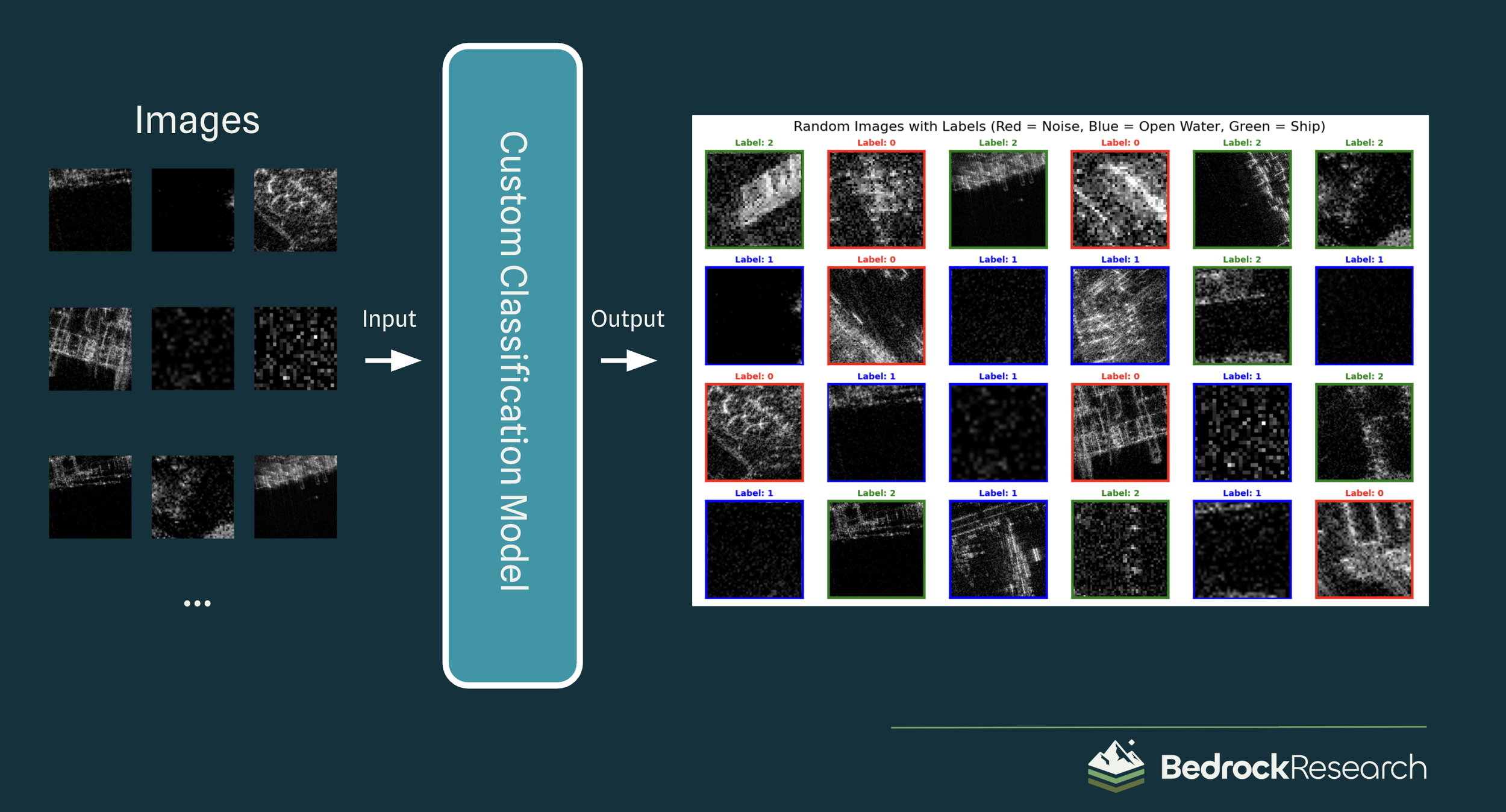
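A minimal sketch of the clustering step, with one loud substitution: to stay dependency-free beyond NumPy, it uses plain k-means, a simpler and different algorithm than HDBSCAN. Unlike HDBSCAN, k-means must be told how many groups to find (three here, for ship, open water, and other) and cannot flag noise. If scikit-learn 1.3 or later is available, `sklearn.cluster.HDBSCAN` provides HDBSCAN itself; it picks the number of clusters on its own and labels outliers `-1`. The 2D points below are synthetic stand-ins for projected embeddings.

```python
import numpy as np

def kmeans(x: np.ndarray, k: int, n_iter: int = 100, seed: int = 0):
    """Plain k-means (a stand-in for HDBSCAN); returns (labels, centroids)."""
    rng = np.random.default_rng(seed)
    # Farthest-point seeding: start from a random point, then repeatedly add
    # the point farthest from every centroid chosen so far.
    centroids = [x[rng.integers(len(x))]]
    for _ in range(k - 1):
        d2 = np.min([((x - c) ** 2).sum(axis=1) for c in centroids], axis=0)
        centroids.append(x[np.argmax(d2)])
    centroids = np.array(centroids)
    for _ in range(n_iter):
        # Assign every point to its nearest centroid.
        d2 = ((x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
        labels = d2.argmin(axis=1)
        # Move each centroid to the mean of its points (keep it if the cluster emptied).
        updated = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centroids[j]
            for j in range(k)
        ])
        if np.allclose(updated, centroids):
            break
        centroids = updated
    return labels, centroids

# Synthetic stand-ins for three groups of projected embeddings, 30 points each.
rng = np.random.default_rng(1)
centers = np.array([[0.0, 0.0], [8.0, 0.0], [0.0, 8.0]])
x = np.vstack([c + rng.normal(0, 0.5, size=(30, 2)) for c in centers])
labels, centroids = kmeans(x, k=3)
```

The resulting IDs are arbitrary: nothing says cluster 0 is “Ship,” which is why a human still glances at each group before naming it.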

4. Create a classification model

Train a classifier on the newly labeled data and check the results!
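The classifier used for the detector isn’t named, so as an assumption this sketch uses a nearest-centroid classifier, about the simplest option: it averages the embeddings in each pseudo-labeled class and assigns a new image to the closest average. Logistic regression or a small neural-network head on top of the embeddings would be common alternatives. All data here is synthetic.

```python
import numpy as np

class NearestCentroidClassifier:
    """Predicts the class whose mean training embedding is closest (Euclidean)."""

    def fit(self, x: np.ndarray, y: np.ndarray) -> "NearestCentroidClassifier":
        self.classes_ = np.unique(y)  # sorted class names
        # One mean embedding per pseudo-labeled class.
        self.centroids_ = np.array([x[y == c].mean(axis=0) for c in self.classes_])
        return self

    def predict(self, x: np.ndarray) -> np.ndarray:
        # Squared distance from every input to every class centroid.
        d2 = ((x[:, None, :] - self.centroids_[None, :, :]) ** 2).sum(axis=2)
        return self.classes_[d2.argmin(axis=1)]

# Synthetic pseudo-labeled embeddings, 20 per class, as the clustering step might yield.
rng = np.random.default_rng(2)
centers = {"Ship": [4.0, 0.0, 0.0], "Open Water": [0.0, 4.0, 0.0], "Other": [0.0, 0.0, 4.0]}
x_train = np.vstack([np.array(c) + rng.normal(0, 0.5, size=(20, 3)) for c in centers.values()])
y_train = np.repeat(list(centers), 20)

clf = NearestCentroidClassifier().fit(x_train, y_train)
print(clf.predict(np.array([[3.8, 0.2, 0.1], [0.1, 0.0, 4.2]])))  # ['Ship' 'Other']
```

Because the classifier only needs embeddings, it can score new scenes without rerunning the clustering step.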

As a reminder, this tool was built entirely without supervision! Aside from a quick glance to name the clusters*, we assembled a ship detector purely from the images themselves; no outside labels or data were required.

* The brief manual naming step can be skipped entirely, but then the classes are just arbitrary IDs such as “0,” “1,” and “2” instead of “Other,” “Open Water,” and “Ship,” which aren’t very meaningful to humans.
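The quick naming glance can be made systematic: for each cluster, pull the few members closest to the cluster’s mean, look at those image chips, and write down a name. The `representatives` helper below is a hypothetical NumPy sketch of that idea; it skips points labeled `-1`, which is how HDBSCAN marks noise, and the `names` mapping stands in for what a human would type after looking.

```python
import numpy as np

def representatives(x: np.ndarray, labels: np.ndarray, n: int = 3) -> dict:
    """For each cluster ID, return indices of the n members closest to the cluster mean."""
    reps = {}
    for cluster_id in np.unique(labels):
        if cluster_id == -1:  # HDBSCAN's label for noise points; nothing to name
            continue
        members = np.flatnonzero(labels == cluster_id)
        center = x[members].mean(axis=0)
        dist = np.linalg.norm(x[members] - center, axis=1)
        reps[int(cluster_id)] = members[np.argsort(dist)[:n]].tolist()
    return reps

# Synthetic 2-D points with arbitrary cluster IDs, as a clusterer would emit them.
x = np.array([
    [0.0, 0.0], [0.2, 0.0], [1.0, 0.0],  # cluster 2
    [5.0, 5.0], [5.0, 5.2], [5.0, 5.3],  # cluster 0
    [9.0, 0.0], [9.0, 0.2], [9.0, 1.0],  # cluster 1
    [20.0, 20.0],                        # noise
])
labels = np.array([2, 2, 2, 0, 0, 0, 1, 1, 1, -1])
print(representatives(x, labels, n=1))  # {0: [4], 1: [7], 2: [1]}

# Hypothetical mapping a human writes after viewing each cluster's representatives.
names = {0: "Other", 1: "Open Water", 2: "Ship"}
named = [names.get(int(c), "Unassigned") for c in labels]
```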

Unlocking latent space insights with Bedrock

By extracting insights from massive datasets without costly manual labeling, Bedrock lets teams move from raw data to viable products at breakneck speed. Whether it’s detecting anomalies or rare classes, classifying terrain hazards, or recognizing complex patterns across multispectral data, Bedrock’s foundation models provide the intelligence needed to push the boundaries of your geospatial analysis. Discover the hidden value in your overhead imagery with Bedrock.

Imagery Sources:

NAIP overhead imagery

AgileView ship imagery

Umbra SAR imagery

A Note About the Author, Ryan McCormick

Having a high school intern at a brand-new startup might seem unconventional, but Ryan McCormick is no conventional intern. Through outreach at his high school, Ryan connected with us and joined as part of a work-study program for school credit during his senior year. His curiosity, motivation, and impressive technical abilities were immediately clear, and he proved more productive and capable from day one than many engineers fresh out of undergrad or even master’s programs.

We were thrilled when he continued with us full time over the summer, contributing innovative capabilities that advanced our geospatial foundation models. We can’t wait to see what he accomplishes as he begins his next chapter as a freshman at Stanford this fall. From all of us at Bedrock: best of luck, Ryan, you’ve set the bar high and we’re cheering you on!

—Matt Reisman, CTO, Bedrock Research